It’s not that you use AI; it’s how.

Don’t get me wrong: used properly, AI is an amazing tool. I use it myself daily for everything from idea generation to image creation to summarization and more.

I also use it for search and Q&A. Mostly. Sometimes. With a great deal of trepidation and skepticism.

When I hear people “just” use AI now in place of more traditional tools and techniques, I get worried… really worried.

Using and trusting AI

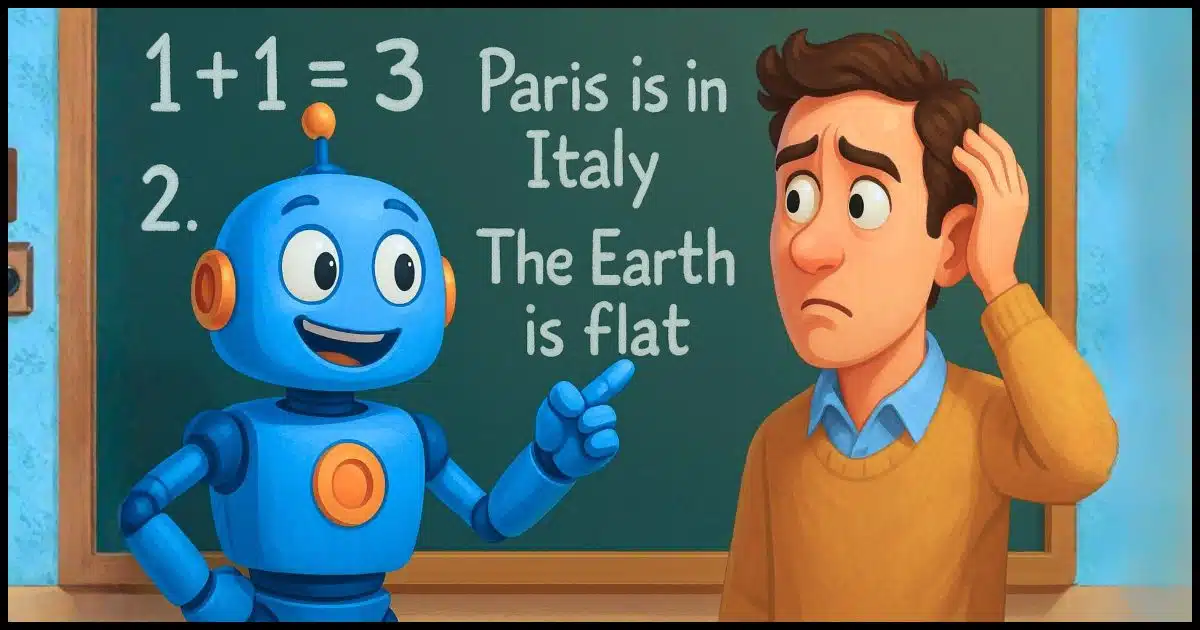

AI can be a powerful tool, but it doesn’t always get things right. Its confidence can fool us into trusting bad answers. Use AI to explore ideas, not as your only source of truth. Stay skeptical, double-check facts, and remember: even smart tools (and people) can be confidently wrong.

AI doesn’t yet deserve your trust

When I say AI here, I’m referring to large language models (LLMs) such as ChatGPT, Copilot, Gemini, Claude, and a host of others. They’re certainly artificial, but whether they’re an “intelligence” is up for debate, both technical and philosophical.[1]

To be clear, they don’t “think”. As others have pointed out, they’re really nothing more than a glorified, immensely powerful auto-complete. The “answers” you get from AI are nothing more than the words that are most likely to follow the words in your question. There was no thought put into it, just massive amounts of statistical analysis.
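To make the auto-complete idea concrete, here is a toy sketch in Python. It is not how a real LLM is built (those use large neural networks over tokens, not simple word counts), and the function names and the tiny "training text" are made up for illustration. It only shows the core idea: pick whichever word most often came next in the training data, with no check on whether the result is true.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, which words follow it and how often."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def autocomplete(following, start, length=5):
    """Repeatedly append the most frequent next word. Nothing is fact-checked."""
    out = [start]
    for _ in range(length):
        candidates = following.get(out[-1])
        if not candidates:
            break  # the model has never seen anything follow this word
        out.append(candidates.most_common(1)[0][0])
    return " ".join(out)


# Hypothetical training text: the wrong claim simply appears more often.
corpus = (
    "the moon is made of cheese . "
    "the moon is made of cheese . "
    "the moon is made of rock ."
)
model = train_bigrams(corpus)
print(autocomplete(model, "moon", length=4))  # moon is made of cheese
```

The output is fluent and delivered without hesitation, and it is wrong, simply because the wrong claim was more common in the text the model learned from.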

Responses from Large Language Models like ChatGPT, Claude, or Gemini are not facts.

They’re predicting what words are most likely to come next in a sequence. They can produce convincing-sounding information, but that information may not be accurate or reliable.[2]

Nonetheless, they’re amazing. It does feel like they do a much better job of understanding[3] my various statements and queries than a traditional search engine. A vague, poorly worded question that might baffle traditional search, for example, can get spot-on results from an AI.

However, understanding my question is completely different from answering it correctly.

It’s easy for us to judge whether AI understood the question. We can recognize (and even be impressed by) how well it grasped what we meant, or realize that it completely misinterpreted us.

It’s nowhere near as easy to evaluate the response it gives us. Unless we’re already familiar with the topic at hand, we have no objective way to evaluate whether the answer is correct.

This should scare you.


Its confidence is misleading

One characteristic of AI that was identified early on was the confidence it exhibited in its answers. It was humorous: AI was known for providing very confident and very inaccurate answers.

While its accuracy has certainly improved, the unwarranted confidence remains. If anything, it’s taken on a new sheen of sycophancy. It not only provides answers with authority, it does so in a way that plays to our egos. It still gives confident answers couched in terms that try to please or suck up to whoever’s asking it.

Sometimes it’s right. I might even say that most of the time it’s right. And yet, it’s still often wrong. Sometimes a little, and sometimes very, very wrong.

With its confidence and eagerness to please, it’s too easy to just assume it’s correct and skip any kind of verification.[4]

This is another reason misinformation spreads: we assume the source is correct when it’s not.

Where AI Q&A is helpful

I’m not saying not to use AI for questions or in place of search. I do it myself.

But.

I use it to augment what I know, not replace it. For example, I ask AI tech questions all the time. It’s not uncommon for me to just copy/paste a question I’ve been asked into an AI — perhaps because I couldn’t understand the question and AI might, but mostly because it’s a quick way to generate potential answers.

I vet those potential answers. Because this is my area of expertise, I can separate the right from the wrong, the pragmatic from the dangerous, and the applicable from the irrelevant. If I need to, I can take what AI has provided and refine it with more queries, often in the AI itself but also in more traditional searches (often including the search on my own site). This process often surfaces issues I wouldn’t have thought of, or wouldn’t have thought of as quickly.

If you’re not familiar with the subject to begin with, it’s terribly easy for AI to lead you astray. I’ve heard from too many people who’ve made a further mess of things simply by blindly following the (no doubt very confident) instructions provided by an AI chatbot.

Skepticism is still required

If you’re going to use AI to research information and answer questions, you must — MUST — remain skeptical of the information it provides. Ignore the confidence and be skeptical of the answer.

Double-check it. Check the references if they’re provided. Pit two (or more) AIs against one another and see how their answers differ.

Use a completely different technique to vet the answer you’ve been given. That could be your own experience, common sense, or more research into the topic at hand.

Just don’t take AI-generated answers at face value unless or until you have something else that would lead you to believe that the answer is correct.
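The "pit two (or more) AIs against one another" step can be sketched roughly like this. It is a minimal illustration, assuming you have already collected each tool's answer by hand. The function names, the source labels, and the sample answers are hypothetical placeholders, not real results.

```python
from collections import Counter


def normalize(answer):
    """Lowercase, collapse whitespace, and drop trailing punctuation."""
    return " ".join(answer.lower().split()).strip(" .!?")


def compare_answers(answers):
    """answers maps a source name to the answer text it gave.

    Returns a verdict string plus a count of each distinct answer.
    """
    counts = Counter(normalize(text) for text in answers.values())
    if len(counts) == 1:
        verdict = "agree (still verify with a non-AI source)"
    else:
        verdict = "disagree (at least one answer is wrong)"
    return verdict, counts


# Hypothetical answers copied by hand from three different chatbots.
answers = {
    "chatbot_one": "Artist A.",
    "chatbot_two": "artist  B",
    "chatbot_three": "Artist C",
}
verdict, counts = compare_answers(answers)
print(verdict)
print(dict(counts))
```

Disagreement is a clear signal to keep digging. Agreement is weaker evidence than it looks, since different AIs may have learned from much of the same material, so it doesn't replace checking a non-AI source.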

Experience over time may not help

One thing humans do is build trust over time. For example, if you’ve gotten an answer from me that turned out to be helpful, you’re slightly more trusting of me the next time you have a question. This is how trust is built.

I’m not sure trust-growing should apply to AI.

If you get a helpful answer from AI, you’re more likely to go to it with your next question. Whether or not the previous answer should influence your level of trust is complicated.

If it’s in an area closely related to your original question, some additional trust might be warranted. An example might include questions about how to get Windows File Explorer to display things a certain way. It could still be wrong, but the probability is lower.

If it’s in a different area, then no additional trust is warranted, period. That it answered a Windows File Explorer question accurately should have no bearing on the answer it might provide about something health-related. These are two completely separate areas of information. (This concept applies to the humans you might ask these questions of as well. Ask me about Windows, but not that suspicious lump on your arm.)

Here’s the problem: When is it really a different area? We don’t always know.

An AI might have ingested more information on Windows File Explorer than on, say, the Windows Event Viewer, device drivers, Windows 11 versus Windows 8, or many other topics. The topics might feel related (they’re all about Windows, in this case), but under the hood, they’re dramatically different areas of information. Once again, the same applies to the humans you might ask.

It’s much too easy to extrapolate accuracy in one area into areas where that trust remains unwarranted.

This isn’t really new

You’ll note earlier I said, “Skepticism is still required”. That’s because in a very basic way, this shouldn’t be new behavior for us at all.

We’ve long been skeptical of search engine results — even more so in recent years, as those results have been skewed by various competing interests ranging from politics to advertising to sponsorships to SEO-gaming and more.

Apply the same level of skepticism — and perhaps a skosh more — to AI.

Do this

Use AI. Just use it with caution.

In particular, be skeptical of the answers it gives you, and double-check anything that’s even mildly important.

That’s good life advice no matter where you’re getting your answers.


Footnotes & References

1: What does it mean to be “intelligent”, anyway?

2: Via Stop Citing AI

3: Whatever “understanding” might mean.

4: My theory is that when something acts “eager to please”, it’s more difficult to think critically about it because of our instinct not to offend… even though there’s nothing here to be offended. Just my pet theory.

Someone said they wondered who won the Juno in a certain category so they asked AI and got an answer they were skeptical of. A friend asked and AI gave a different answer. Another friend asked AI and got a third answer.

Microsoft and Google give AI answers in their search engines but I never believe them. I follow the links to their sources and check those out.

I’ve basically taken your advice before you gave it. I’m in my 50s, and having what is basically a ‘chatty interface to a search engine’ is handy, if you remember that is what it is, with a few added bells and whistles.

Tools like ChatGPT can be told specifically to remember your preferences too. For example, my settings are ‘if I ask for a bit of specific info without preamble, I want an answer, and I need citations and links, thank you’.

Used like that it’s a powerful and amazing tool. But as you’ve said, treating everything it says as gospel, is a user error… 🙂

Leo, I DON’T KNOW anymore what a “traditional search engine” is. Are you talking about Google, or Bing, or one of those types?

Exactly. A traditional search engine returns a list of links to sites where what you’re searching for resides.

So far, I have had no use for CoPilot, ChatGPT, or any of the other AI models. Especially when they all have their hands out to use them. My experience with them has been a couple of free queries, then either wait a month or pay for yet another subscription. Having a fixed income makes me think twice about what I’m willing to spend money on.

I rarely used Google to search, stopped using Bing a couple of years ago in favor of DuckDuckGo, and am now using Kagi. I have been able to find what I need by strictly using a search engine. I’m not so pressed for time that I need a summary generated by AI. I’m still capable of looking at search results to get the details for what I’m looking for. Unfortunately, some of the results seem to be written by people who use AI in their writing to generate more traffic to their sites.

ChatGPT (with older data sets) is a handy chatty interface to traditional search these days, with the caveats Leo gave. It will nag you to upgrade to get the latest and greatest, but you can ignore that. No more limits on queries per day that I’ve run into.

When using Chrome, I don’t specifically invoke Gemini; I simply type a question into the Google search bar. Usually, the first response I get is titled “AI Overview.” Is that the type of AI answer you are talking about? I really like those “AI Overviews” and the “Dive deeper in AI Mode.” Do they also have potentially wrong and hallucinatory answers?

Even when you do a normal search on Google, Bing, or many other search engines, you get an AI overview at the top of the page along with the sponsored results. As for hallucinations, they are just as common as on the chat pages. Google search uses Gemini, and Bing uses Copilot.

Fortunately, you can scroll past the AI overview and go directly to the search links.

That’s Google search. And yes, AI overviews can be in error as well.

I prefer to use the standard search engines, mostly Google, seeing that it’s on all our work computers, as Bing has had issues. The only AI I’ve really used has been Copilot, as this is the only one I can use for work, so I have used it at home when I’ve needed assistance rewording correspondence or selection criteria more professionally, or just to see if I’m on the right track.

With regard to searching, I usually scroll down to the non-sponsored results and check whether they are based in Australia (where I’m from), as those are more likely to be the answers I’m looking for. This does depend on what I’m looking for. But as you’ve advised, AI has its uses, including the AI overview. I do have a quick read of the first few lines to see if I’m on the right track with my terminology or questions when searching for unfamiliar items.

Leo,

Your assessment about AI is spot on. Thank you for all your warnings!

I thought you might find this article about how AI is “trained” interesting:

Puppeteers of Perception: How Artificial Intelligence (AI) Systems are Designed to Mislead.

It can be found at the Journal of American Physicians and Surgeons. https://jpands.org/jpands2903.htm

Here’s a short excerpt: ” The most blatant and obvious programmed AI system lying occurs most regularly in discussions about climate change, social issues, politics, elections, anything controversial, and in general anything even remotely related to any of the aforementioned.” One company that was found doing this is Google.

Yes. The old computer law, GIGO, Garbage In Garbage Out. The Internet is loaded with garbage, and LLMs (better known as AI) train on that garbage along with the real meat. AI will never work until they can distinguish real from fake. I’m not hopeful.

PEOPLE can’t tell real from fake, even before AI came along. AI is training on what people do. Falling for misinformation means it’s doing an excellent job of mimicking being human.

Looks like AI is passing the Turing Test 😉 . It’s just as dumb as humans.

They’re not so much lying as they are just parroting all the misinformation they’ve been trained on.

I’m an atypical AI user. I use the browser version of Copilot as a first-reader for pieces of my manuscript. That way, I’m not driving my friends crazy asking them to read this brand new piece “hot off the press.” I also use it as a sounding board – I’m an external processor, so “talking it out” – or in this case, typing it out – helps me figure out what I was really trying to say.

I have to constantly remind it that I do NOT want it to write for me, but I will ask it to look up statistics, compare two products, tell me what the internet says about a product, etc. It can research faster than I can, and it does math faster than I can, so I will happily use it for those types of things. And yes, I check its results, and the links that it points me to. I also realize that it gives me the answers I want, but I’m ok with that, given what I use it for.

Thanks Leo. Probably the first really mature, insightful and intelligent article / commentary I’ve yet seen on “how” to use AI productively.

Clearly, much will change going forward, but, your “instruction manual” above certainly makes a huge amount of sense at this particular point of AI development.

Thanks!

Almost everything you say could be applied to human celebrity influencers!

In general, this is very good advice. Ultimately, we have to take responsibility for our own opinions – wherever we get the input.

But, having said that, it is possible to ask your questions in a way that constrains AI, keeps it on topic, and reduces the likelihood of error. It’s called “prompting” and it’s going to be an important skill in the future https://effortlessacademic.com/ai/.

(How do I make that link live?)

Proper prompts are essential to good AI results, but the Web is full of garbage, and you still might ask the right question and get the wrong answer. The quality of the output is limited by the quality of the data the AI is trained on or pulling from. And services that offer to solve that for you are often frauds like most SEO services.

I developed a huge scepticism of AI when I asked if a certain musician performed in a television series. The answer was comprehensive, definitive, and 100% wrong. I suspected it was wrong because not many people sound like Peter Gabriel, and I found the correct answer using conventional search engines. As you point out, the AI answer expresses no doubt, uncertainty, or equivocation, all of which are highly underrated.

Very helpful article.

Another concern I have is that AI will erode our ability to research and think (like using a calculator and losing our mental math capability).

And the opportunity for large-scale manipulation of public opinion is massive.

Hence I like your advice to be sceptical and double-check the information. This will help to maintain our researching and critical thinking skills.

It’s so easy to become lazy with AI! Let’s all remind each other to use it critically, and not overuse it!

Did you know that the current administration wants to “save money” by using AI to authorize and/or deny certain Medicare claims? (Some states have already rolled out tests)

So, you’d rather have your claims denied by a person rather than AI? 😉